Welcome to my website!

About Me

My name is Niklas and I am pursuing a PhD under the supervision of Jan Peters in the Intelligent Autonomous Systems Group at TU Darmstadt.

I am broadly interested in algorithms and methods that enable and advance Robotic Manipulation. In recent years, I have focused especially on the field of Robot Learning, i.e., the intersection of Robotics and Machine Learning. Besides making these ideas work in simulation, I have also invested substantial time in making the methods work on several real-world robotic platforms.

- Robotics

- Dexterous Manipulation

- Tactile Sensing

- Imitation / Reinforcement Learning

- Graph-based Representations

- BSc in Electrical Engineering and Information Technology, 2017, ETH Zurich

- MSc in Robotics, Systems & Control, 2020, ETH Zurich

Featured Publications

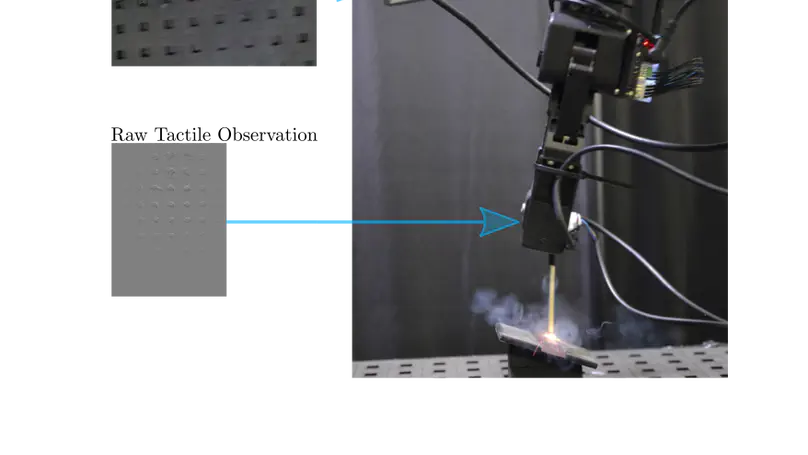

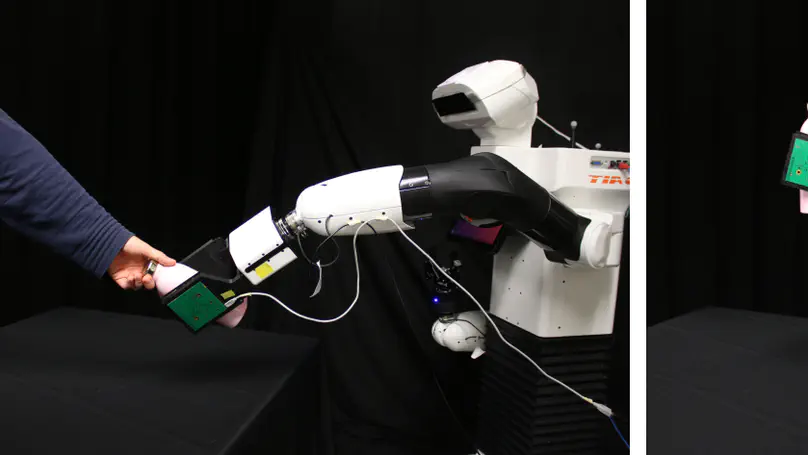

The field of robotic manipulation has advanced significantly in recent years. At the sensing level, several novel tactile sensors have been developed, capable of providing accurate contact information. On a methodological level, learning from demonstrations has proven to be an efficient paradigm for obtaining performant robotic manipulation policies. Combining both promises to extract crucial contact-related information from the demonstration data and actively exploit it during policy rollouts. However, despite its potential, this direction remains underexplored. This work therefore proposes a multimodal, visuotactile imitation learning framework capable of efficiently learning fast and dexterous manipulation policies. We evaluate our framework on the dynamic, contact-rich task of robotic match lighting, a task in which tactile feedback influences human manipulation performance. The experimental results show that incorporating tactile information into the policies significantly improves performance by over 40%, thereby underlining the importance of tactile sensing for contact-rich manipulation tasks.

Best Paper Award in the RSS 2024 Workshop on Priors4Robots

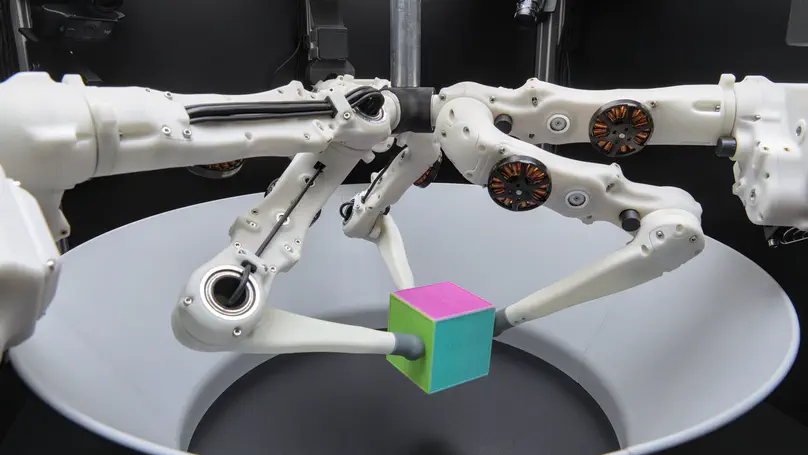

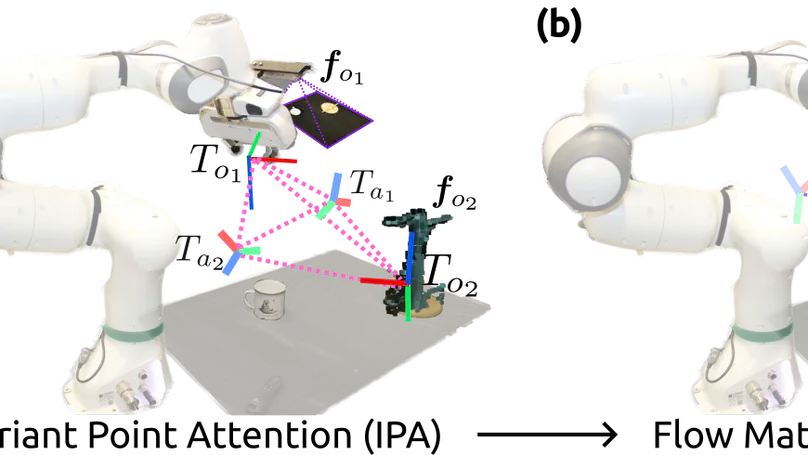

This paper introduces a novel policy class combining Flow Matching with SE(3) Invariant Transformers for efficient, equivariant, and expressive robot learning from demonstrations. We showcase the performance of the method in both simulation and real-robot environments, considering low-dimensional as well as high-dimensional real-world observations.

This work proposes a new event-based optical tactile sensor called Evetac. The main motivation for investigating event-based optical tactile sensors is their high spatial and temporal resolution and low data rates. Benchmarking experiments demonstrate Evetac’s ability to sense vibrations up to 498 Hz, reconstruct shear forces, and significantly reduce data rates compared to RGB optical tactile sensors. Moreover, Evetac’s output provides meaningful features for learning data-driven slip detection and prediction models. The learned models form the basis for a robust and adaptive closed-loop grasp controller capable of handling a wide range of objects. We believe that fast and efficient event-based tactile sensors like Evetac will be essential for bringing human-like manipulation capabilities to robotics.

Finalist for IROS Best Paper Award on Mobile Manipulation

This work investigates the effectiveness of tactile sensing for the practical everyday problem of stable object placement on flat surfaces starting from unknown initial poses. We devise a neural architecture that estimates a rotation matrix, resulting in a corrective gripper movement that aligns the object with the placing surface for the subsequent object manipulation. We compare models with different sensing modalities, such as force-torque, an external motion capture system, and two classical baseline models in real-world object placing tasks with different objects. The experimental evaluation of our placing policies with a set of unseen everyday objects reveals significant generalization of our proposed pipeline, suggesting that tactile sensing plays a vital role in the intrinsic understanding of robotic dexterous object manipulation.

Best Paper Award in the Geometric Representations Workshop at ICRA 2023

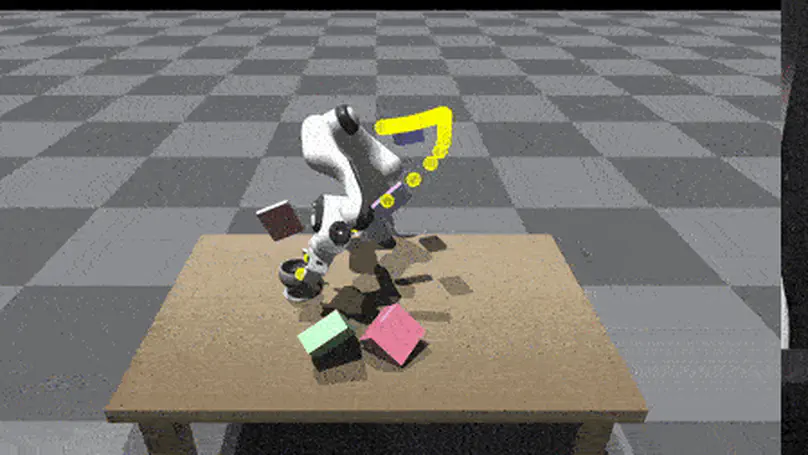

We propose learning task-space, data-driven cost functions as diffusion models. Diffusion models represent expressive multimodal distributions and exhibit proper gradients over the entire space. We exploit these properties for motion optimization by integrating the learned cost functions with other costs in a single objective function and optimizing all of them jointly by gradient descent.

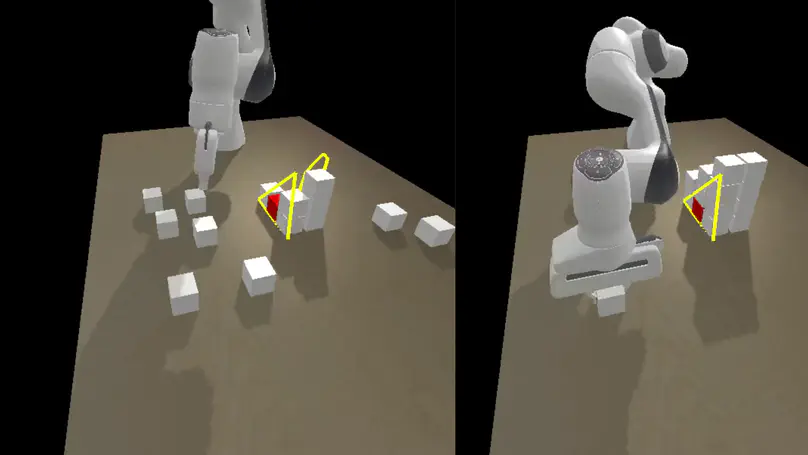

We propose a novel hybrid method for Robot Assembly Discovery that combines Mixed Integer Programming with a graph-based reinforcement learning agent.

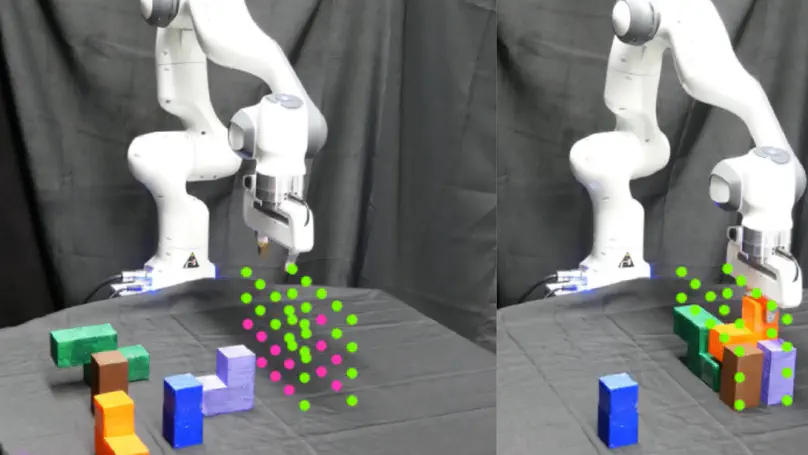

We propose a novel method for learning to assemble arbitrary structures from scratch. The transformer-like graph-based neural network jointly decides which blocks to use and how to assemble the structure with the robot in the loop.