Graph-based Reinforcement Learning meets Mixed Integer Programs: An application to 3D robot assembly discovery

Abstract

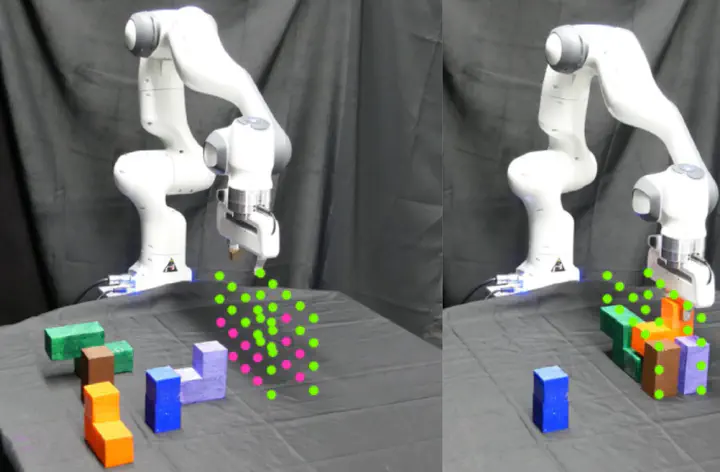

Robot assembly discovery is a challenging problem that lives at the intersection of resource allocation and motion planning. The goal is to combine a predefined set of objects to form something new while considering task execution with the robot-in-the-loop. In this work, we tackle the problem of building arbitrary, predefined target structures entirely from scratch using a set of Tetris-like building blocks and a robotic manipulator. Our novel hierarchical approach aims at efficiently decomposing the overall task into three feasible levels that benefit mutually from each other. On the high level, we run a classical mixed-integer program for global optimization of block-type selection and the blocks’ final poses to recreate the desired shape. Its output is then exploited to efficiently guide the exploration of an underlying reinforcement learning (RL) policy. This RL policy draws its generalization properties from a flexible graph-based representation that is learned through Q-learning and can be refined with search. Moreover, it accounts for the necessary conditions of structural stability and robotic feasibility that cannot be effectively reflected in the previous layer. Lastly, a grasp and motion planner transforms the desired assembly commands into robot joint movements. We demonstrate the performance of the proposed method on a set of competitive simulated robot assembly discovery environments and report performance and robustness gains compared to an unstructured end-to-end approach.