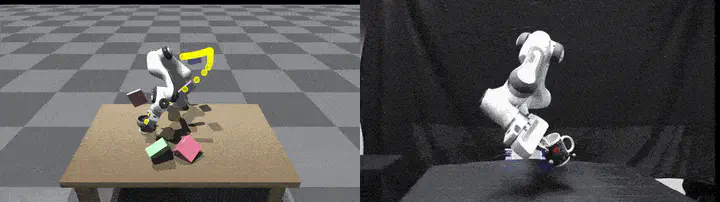

SE(3)-DiffusionFields: Learning smooth cost functions for joint grasp and motion optimization through diffusion

Abstract

Multi-objective high-dimensional motion optimization problems are ubiquitous in robotics and benefit greatly from informative gradients. Exploiting such gradients requires all cost functions to be differentiable. We propose learning task-space, data-driven cost functions as diffusion models. Diffusion models represent expressive multimodal distributions and provide well-defined gradients over the entire space. We exploit these properties for motion optimization by integrating the learned cost functions with other potentially learned or hand-tuned costs into a single objective function, and we optimize all of them jointly by gradient descent. We showcase the benefits of this joint optimization on a set of complex grasp and motion planning problems and compare against hierarchical approaches that decouple grasp selection from motion optimization.
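To make the idea of a single jointly optimized objective concrete, the following is a toy sketch under strong assumptions, not the method's actual implementation. It works in 2-D instead of SE(3). In place of a trained diffusion model, the learned cost is the analytic negative log-density of a small set of grasp samples smoothed by Gaussian noise of standard deviation `sigma`, which is the density a denoising diffusion model would approximate at that noise level. The data points, the hand-tuned "reach" cost, the weights, and the annealing schedule are all hypothetical choices made for illustration, as are the names `GRASPS`, `grasp_cost_grad`, `reach_cost_grad`, and `joint_optimize`.

```python
import math

# Hypothetical toy data: two valid "grasps" in 2-D, standing in for the
# SE(3) grasp poses a trained diffusion model would capture.
GRASPS = [(1.0, 0.0), (-1.0, 0.0)]


def grasp_cost_grad(x, sigma):
    """Cost and gradient of -log p_sigma(x), where p_sigma is the grasp data
    smoothed with isotropic Gaussian noise of std sigma (a stand-in for a
    learned diffusion cost at noise level sigma)."""
    logits = [-((x[0] - g[0]) ** 2 + (x[1] - g[1]) ** 2) / (2 * sigma ** 2)
              for g in GRASPS]
    m = max(logits)  # subtract the max for a numerically stable log-sum-exp
    exps = [math.exp(a - m) for a in logits]
    z = sum(exps)
    cost = -(m + math.log(z))
    weights = [e / z for e in exps]  # softmax responsibility of each grasp
    # Gradient of -logsumexp: softmax-weighted offsets from each data point.
    gx = sum(w * (x[0] - g[0]) for w, g in zip(weights, GRASPS)) / sigma ** 2
    gy = sum(w * (x[1] - g[1]) for w, g in zip(weights, GRASPS)) / sigma ** 2
    return cost, (gx, gy)


def reach_cost_grad(x, start):
    """Hypothetical hand-tuned cost: squared distance from the start pose."""
    dx, dy = x[0] - start[0], x[1] - start[1]
    return dx * dx + dy * dy, (2 * dx, 2 * dy)


def joint_optimize(start, w_reach=1.0, levels=10, steps=50, eta=0.5):
    """Gradient descent on grasp_cost + w_reach * reach_cost, annealing sigma
    geometrically from 1.0 (smooth, unimodal) to 0.05 (sharp, multimodal)."""
    x = start
    for k in range(levels):
        sigma = 0.05 ** (k / (levels - 1))  # 1.0 at k=0, 0.05 at the last level
        lr = eta * sigma ** 2  # scale the step with the cost's curvature
        for _ in range(steps):
            _, g_grasp = grasp_cost_grad(x, sigma)
            _, g_reach = reach_cost_grad(x, start)
            x = (x[0] - lr * (g_grasp[0] + w_reach * g_reach[0]),
                 x[1] - lr * (g_grasp[1] + w_reach * g_reach[1]))
    return x


if __name__ == "__main__":
    # Starting on the right, the joint objective settles near the grasp at (1, 0).
    print(joint_optimize(start=(0.3, 0.5)))
```

No grasp is selected up front: the optimizer descends the sum of both costs, and the grasp it reaches depends on the other cost term. In this toy, starting at `(-0.3, 0.5)` instead leads to the grasp near `(-1, 0)`. The final pose sits slightly off the grasp sample because the reach cost still pulls toward the start. In a hierarchical pipeline, by contrast, the grasp would be fixed first and the motion optimized afterwards.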